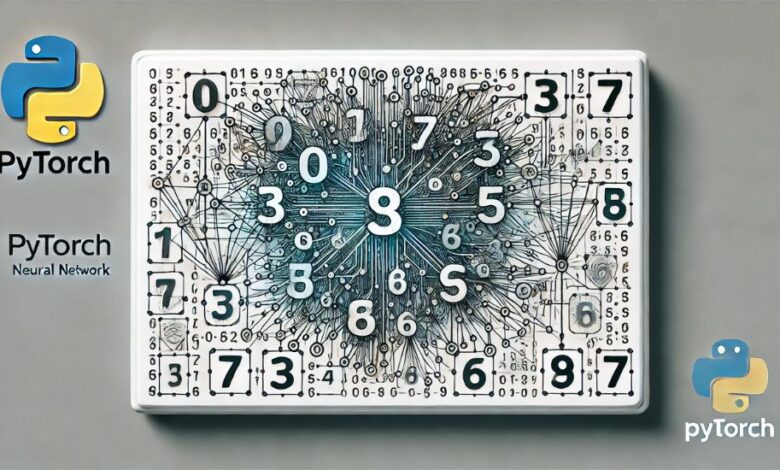

Building a PyTorch Neural Network for MNIST Classification

The world of artificial intelligence and deep learning is vast, but few starter projects are as widely used as classifying handwritten MNIST digits with a PyTorch neural network. The MNIST dataset has long been a staple for testing machine learning models and serves as a standard benchmark for beginners and experts alike. This article by USA Magzines walks through building a neural network in PyTorch for the MNIST dataset and covers the basics of this foundational deep learning task.

In this article, we will explore what makes the MNIST dataset ideal for neural network training, discuss PyTorch’s capabilities, and provide an overview of the steps required to create a neural network model in PyTorch. We’ll also cover some best practices, how to improve model accuracy, and the value of using the MNIST dataset in neural network training.

What is the MNIST Dataset?

The MNIST (Modified National Institute of Standards and Technology) dataset is a collection of 70,000 grayscale images of handwritten digits from 0 to 9. Every image is size-normalized and centered in a 28×28 pixel grid, so all inputs share the same small, fixed shape, which keeps neural network training simple. The dataset is popular because it is small, clean, and well structured, making it an ideal first dataset for experimenting with machine learning and deep learning techniques.

Each image is made up of 8-bit grayscale pixel values ranging from 0 (black) to 255 (white). The dataset is divided into 60,000 training images and 10,000 test images. By using this dataset, we can train a neural network to recognize handwritten digits, a useful foundation for broader image recognition tasks.

Why Use PyTorch for Neural Network Development?

PyTorch has become one of the leading frameworks for deep learning development, and it’s especially popular in academic and research settings. Originally developed by Facebook’s AI Research lab (FAIR), PyTorch is known for its ease of use, flexibility, and dynamic computation graph. Because the graph is built as the code runs, developers can modify and debug models with ordinary Python instead of redefining a static graph after each change.

Using PyTorch for building neural networks on the MNIST dataset offers numerous advantages:

- Ease of Use: PyTorch’s syntax is Pythonic and intuitive, allowing developers to focus on model architecture rather than complex configurations.

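- Flexibility: It supports dynamic graph computations, meaning the computation graph is rebuilt on every forward pass, so a model can use ordinary Python control flow (loops, conditionals) and its structure can change from one call to the next.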

- Robust Community Support: With a large and active community, PyTorch has extensive documentation, forums, and tutorials.

- Efficient Computation: PyTorch supports GPU acceleration, making it faster and more efficient for training complex neural networks.
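
The dynamic-graph point can be made concrete with a short sketch. The toy class VariableDepthNet and its depth argument are hypothetical, made up for this illustration rather than taken from any library: a plain Python loop inside forward decides how many times a hidden layer is applied, and autograd records whichever path actually ran, so the same model can be called with a different depth each time.

```python
import torch
import torch.nn as nn


class VariableDepthNet(nn.Module):
    """Hypothetical toy model: how often the hidden layer runs is chosen at call time."""

    def __init__(self, width: int = 16):
        super().__init__()
        self.inp = nn.Linear(784, width)
        self.hidden = nn.Linear(width, width)  # one layer, reused a variable number of times
        self.out = nn.Linear(width, 10)

    def forward(self, x: torch.Tensor, depth: int) -> torch.Tensor:
        x = torch.relu(self.inp(x))
        # Plain Python loop: the graph is recorded as it runs,
        # so each call may take a different path through the model.
        for _ in range(depth):
            x = torch.relu(self.hidden(x))
        return self.out(x)


model = VariableDepthNet()
batch = torch.randn(4, 784)  # 4 fake flattened 28x28 images
shallow = model(batch, depth=1)
deep = model(batch, depth=3)
print(shallow.shape, deep.shape)  # torch.Size([4, 10]) torch.Size([4, 10])

deep.sum().backward()  # gradients flow back through all three uses of self.hidden
```

A static-graph framework would need the depth fixed when the graph is defined; here it is just a function argument.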

For these reasons, USA Magzines recommends PyTorch as an excellent choice for anyone aiming to create a neural network for the MNIST dataset.

Setting Up the Environment for a PyTorch MNIST Neural Network

Step 1: Installing PyTorch

To get started, you’ll need to install PyTorch. Installation instructions tailored to your operating system, package manager, and CUDA version can be found on the PyTorch website. Make sure to also install torchvision, PyTorch’s companion library, which provides image transforms and ready-made datasets (including MNIST).

Step 2: Loading the MNIST Dataset

Loading the MNIST dataset in PyTorch is straightforward because torchvision ships it as a ready-made dataset class, torchvision.datasets.MNIST, which can download the files on first use. Preprocessing typically converts each image to a tensor and then normalizes it, which centers the pixel values around 0 and helps stabilize the training process.

Designing the Neural Network Architecture

Creating a neural network in PyTorch requires defining a model class that specifies the layers and forward propagation behavior. For the MNIST dataset, a simple neural network architecture might look something like this:

- Input Layer: Accepts the 28×28 pixel image, flattened into a 784-element vector for the fully connected layers.

- Hidden Layers: A couple of fully connected layers, typically with ReLU activation functions.

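- Output Layer: A layer with 10 nodes, one per digit. Its raw scores (logits) can be converted into a probability distribution with softmax; when training with nn.CrossEntropyLoss, however, the network should output the raw logits, because that loss applies log-softmax internally.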

A basic PyTorch model for MNIST classification can be written as a class, MNISTNet, that inherits from nn.Module. Its constructor initializes three fully connected layers (fc1, fc2, and fc3), and its forward method specifies how data flows through the network, applying the ReLU activation function between layers to introduce non-linearity.
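
A minimal sketch of such an MNISTNet follows. The class and layer names come from the description above; the hidden sizes of 128 and 64 are assumptions. The model deliberately returns raw logits, with softmax applied separately only when probabilities are needed, because nn.CrossEntropyLoss expects logits.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class MNISTNet(nn.Module):
    def __init__(self, hidden1: int = 128, hidden2: int = 64):
        super().__init__()
        self.fc1 = nn.Linear(28 * 28, hidden1)  # flattened 28x28 image -> first hidden layer
        self.fc2 = nn.Linear(hidden1, hidden2)  # second hidden layer
        self.fc3 = nn.Linear(hidden2, 10)       # output layer: one logit per digit

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = torch.flatten(x, start_dim=1)       # (N, 1, 28, 28) -> (N, 784)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        return self.fc3(x)                      # raw logits, no softmax here


model = MNISTNet()
logits = model(torch.randn(2, 1, 28, 28))      # two fake images
probs = F.softmax(logits, dim=1)               # probabilities only when needed, e.g. for display
```

With these sizes the model has 109,386 trainable parameters, most of them in fc1 (784 × 128 weights plus 128 biases).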

Training the Neural Network on the MNIST Dataset

After defining the model architecture, it’s time to train the network. Training involves passing images through the network, calculating the error between the predictions and actual labels, and then adjusting the weights to minimize this error.

- Define Loss and Optimizer: PyTorch provides a variety of loss functions (torch.nn) and optimizers (torch.optim). For classification, cross-entropy loss (nn.CrossEntropyLoss) is typically used, paired with an optimizer such as SGD or Adam.

- Training Loop: In each epoch, we iterate over batches of data, reset the gradients, perform forward propagation, calculate the loss, backpropagate to compute gradients, let the optimizer update the weights, and print the training progress.

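Note that PyTorch accumulates gradients by default, so each iteration must clear the previous gradients with optimizer.zero_grad() before calling loss.backward() and optimizer.step().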
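
The steps above can be sketched as a reusable function. This is an illustration under assumptions, not the article’s original code: the function name train_one_epoch, the log_every parameter, and the SGD settings in the commented usage are choices made here. It assumes the model returns raw logits, which is what nn.CrossEntropyLoss expects.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader


def train_one_epoch(model: nn.Module, loader: DataLoader, optimizer: torch.optim.Optimizer,
                    loss_fn: nn.Module, device: torch.device, log_every: int = 100) -> float:
    """Run one pass over the training data and return the mean loss per batch."""
    model.train()                           # training-mode behavior (matters for dropout/batch norm)
    total_loss = 0.0
    for batch_idx, (images, labels) in enumerate(loader):
        images, labels = images.to(device), labels.to(device)

        optimizer.zero_grad()               # clear gradients left over from the previous step
        logits = model(images)              # forward pass
        loss = loss_fn(logits, labels)      # cross-entropy between logits and true digits
        loss.backward()                     # backpropagation: compute gradients
        optimizer.step()                    # update the weights using those gradients

        total_loss += loss.item()
        if batch_idx % log_every == 0:
            print(f"batch {batch_idx}: loss {loss.item():.4f}")
    return total_loss / len(loader)


# Usage sketch (assumes `model`, `train_loader`, and `device` already exist):
# loss_fn = nn.CrossEntropyLoss()
# optimizer = torch.optim.SGD(model.parameters(), lr=0.01, momentum=0.9)
# for epoch in range(5):
#     avg = train_one_epoch(model, train_loader, optimizer, loss_fn, device)
#     print(f"epoch {epoch + 1}: average loss {avg:.4f}")
```

Keeping one epoch in a function makes it easy to reuse the same loop when comparing models or optimizers later.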

Evaluating Model Performance

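Once the model is trained, we evaluate it on the held-out test data to measure its accuracy. During evaluation, the model should be switched to evaluation mode with model.eval(), gradient tracking can be turned off with torch.no_grad() to save memory and time, and the predicted digit for each image is the index of its largest output score.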
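
A minimal sketch of that evaluation, assuming the model returns one logit per digit; the function name evaluate is hypothetical.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader


@torch.no_grad()                                  # no gradient bookkeeping during evaluation
def evaluate(model: nn.Module, loader: DataLoader, device: torch.device) -> float:
    """Return classification accuracy (fraction of correct predictions) on `loader`."""
    model.eval()                                  # inference behavior for dropout/batch norm layers
    correct, total = 0, 0
    for images, labels in loader:
        images, labels = images.to(device), labels.to(device)
        predictions = model(images).argmax(dim=1)  # index of the largest logit = predicted digit
        correct += (predictions == labels).sum().item()
        total += labels.size(0)
    return correct / total
```

With a trained model, a test loader, and a device in place, print(f"Test accuracy: {evaluate(model, test_loader, device):.2%}") reports the result as a percentage. If training continues afterwards, call model.train() again.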

Improving Model Accuracy with Hyperparameter Tuning

Several factors influence model accuracy, including the learning rate, the number of hidden layers, and the size of each layer. Experimenting with these parameters, or hyperparameters, can significantly improve the model’s performance.

Here are a few ways to enhance your PyTorch MNIST classifier:

- Increasing Hidden Layer Size: Adding more neurons to hidden layers lets the model capture more complex patterns, at the cost of more parameters, slower training, and a higher risk of overfitting.

- Using Different Optimizers: Optimizers like Adam can accelerate convergence.

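- Experimenting with Learning Rate: A smaller learning rate makes each update more cautious and can reach a better final accuracy, but it usually needs more epochs; a rate that is too large can make the loss oscillate or diverge.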
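
These knobs can be explored with a small, hypothetical search grid. In this sketch, build_model and build_optimizer are illustrative helpers, the hidden sizes and learning rates are example values rather than recommendations from this article, and the model is fixed at one hidden layer for brevity; torch.optim.SGD and torch.optim.Adam are the real PyTorch optimizers.

```python
import torch
import torch.nn as nn


def build_model(hidden_size: int) -> nn.Module:
    """Hypothetical helper: a one-hidden-layer MNIST classifier with configurable width."""
    return nn.Sequential(
        nn.Flatten(),                    # (N, 1, 28, 28) -> (N, 784)
        nn.Linear(28 * 28, hidden_size),
        nn.ReLU(),
        nn.Linear(hidden_size, 10),      # logits for the 10 digits
    )


def build_optimizer(name: str, params, lr: float) -> torch.optim.Optimizer:
    """Hypothetical helper: map an optimizer name to a torch.optim instance."""
    if name == "sgd":
        return torch.optim.SGD(params, lr=lr, momentum=0.9)
    if name == "adam":
        return torch.optim.Adam(params, lr=lr)
    raise ValueError(f"unknown optimizer: {name}")


# Illustrative grid; the values are examples, not tuned settings.
configs = [
    {"hidden_size": 128, "optimizer": "sgd", "lr": 0.01},
    {"hidden_size": 256, "optimizer": "adam", "lr": 1e-3},
    {"hidden_size": 256, "optimizer": "adam", "lr": 1e-4},
]

for cfg in configs:
    model = build_model(cfg["hidden_size"])
    optimizer = build_optimizer(cfg["optimizer"], model.parameters(), cfg["lr"])
    n_params = sum(p.numel() for p in model.parameters())
    print(cfg, f"{n_params} parameters", type(optimizer).__name__)
    # Train each configuration the same way, then record its validation accuracy.
```

Each configuration would then be trained with the same loop and compared by accuracy on a validation split carved out of the 60,000 training images, so that the 10,000 test images are used only once, for the final score.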

Conclusion

Training a PyTorch neural network on the MNIST dataset is a foundational project for anyone venturing into deep learning. It provides insights into model building, optimization, and evaluation processes critical for more advanced applications. USA Magzines highlights that with PyTorch’s flexibility and MNIST’s simplicity, this project is ideal for mastering the core concepts of neural networks and preparing for more complex datasets and applications.