Understanding Overfitting in PyTorch Neural Networks: Causes, Detection, and Solutions

In this article, we’ll dive into what an overfit PyTorch neural network is, why overfitting occurs, how to identify it, and practical ways to manage it. This in-depth look will help you keep your models from simply memorizing the training data, a critical step in making sure they generalize well to unseen data.

Welcome to USA Magzines, where we delve into the intricacies of machine learning and neural networks, bringing you valuable insights and tips for effective modeling.

What is Overfitting in PyTorch Neural Networks?

Overfitting is a scenario in machine learning where a model performs exceptionally well on training data but fails to generalize to new, unseen data. When this happens, the model captures not only the true patterns in the data but also the noise: it memorizes the training examples instead of learning patterns that transfer. Overfitting is not specific to PyTorch; a PyTorch neural network overfits for the same reasons as one built with any other framework, typically because it is too complex for the available data or has been trained for too long without proper regularization.

Deep learning models, including those built with PyTorch, are especially prone to this problem because they have the capacity to learn highly intricate patterns. With many parameters and no regularization, a neural network can easily overfit. Let’s explore what causes this, how to detect it, and how to tackle it.

Causes of Overfitting in PyTorch Neural Networks

Understanding the root causes of overfitting is essential to prevent it in your PyTorch models. Here are the key factors that lead to overfitting:

1. Complex Model Architecture

One of the main reasons for overfitting is the complexity of the model architecture. Neural networks with a large number of layers and parameters have the capacity to memorize the training data rather than learn generalizable patterns. In PyTorch, creating complex models with ResNet or DenseNet architectures, for instance, can lead to overfitting if the network is too complex for the dataset size.
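One rough way to gauge whether a model is large relative to its data is to compare its number of trainable parameters with the number of training samples. The sketch below does this for two hypothetical fully connected models and an assumed training set of 500 samples; the ratio is only a heuristic, since large networks can still generalize well with enough data, regularization, and augmentation.

```python
import torch.nn as nn

def count_parameters(model: nn.Module) -> int:
    """Return the number of trainable parameters in a model."""
    return sum(p.numel() for p in model.parameters() if p.requires_grad)

# Hypothetical small MLP for 20-feature inputs and 2 classes.
small_model = nn.Sequential(nn.Linear(20, 32), nn.ReLU(), nn.Linear(32, 2))

# Hypothetical much wider variant of the same network.
large_model = nn.Sequential(
    nn.Linear(20, 1024), nn.ReLU(),
    nn.Linear(1024, 1024), nn.ReLU(),
    nn.Linear(1024, 2),
)

num_samples = 500  # assumed training-set size, for illustration only
for name, m in [("small", small_model), ("large", large_model)]:
    n = count_parameters(m)
    print(f"{name}: {n:,} parameters, {n / num_samples:.1f} per training sample")
```

Here the small model has 738 parameters and the large one has 1,073,154, so the large model has roughly 2,000 parameters per training sample and far more room to memorize individual examples.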

2. Insufficient Training Data

Another common cause of overfitting in PyTorch neural networks is limited training data. With insufficient data, the model can learn the specific details of each sample rather than general trends. This is particularly problematic in cases where data collection is challenging, expensive, or time-consuming.

3. Lack of Regularization Techniques

Regularization techniques, such as dropout or weight decay, help to reduce the model’s ability to memorize the training data. In PyTorch, these regularization techniques can be implemented easily, but if they are not utilized or are used incorrectly, the model is likely to overfit.
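A frequent way to use regularization incorrectly in PyTorch is forgetting to switch between training and evaluation modes. Layers such as `nn.Dropout` behave differently depending on whether `model.train()` or `model.eval()` was called last, so evaluating a model still in training mode gives noisy, misleading results. The tiny model below is a hypothetical illustration of the difference.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
model = nn.Sequential(nn.Linear(8, 8), nn.Dropout(p=0.5))
x = torch.randn(4, 8)

model.train()              # training mode: Dropout zeroes activations at random
a, b = model(x), model(x)
print(torch.equal(a, b))   # almost certainly False: each call draws a new random mask

model.eval()               # evaluation mode: Dropout is disabled, outputs are deterministic
with torch.no_grad():
    c, d = model(x), model(x)
print(torch.equal(c, d))   # True
```

`model.train()` and `model.eval()` set the mode recursively on every submodule, so one call covers all dropout and batch normalization layers in the network.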

4. Extended Training Time

Training a model for too many epochs without careful monitoring can lead to overfitting. In PyTorch, it is easy to run training loops for hundreds of epochs, which can make the model memorize training data rather than generalize. Monitoring the model’s performance on a validation set is essential to avoid this issue.
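A minimal sketch of a training loop that records both training and validation loss every epoch, so that divergence between the two is visible. The synthetic data, the model size, the hyperparameters, and the `run_epoch` helper are all illustrative assumptions, not recommendations.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Hypothetical synthetic data: 200 training and 100 validation samples, 20 features.
X = torch.randn(300, 20)
y = (X[:, 0] + 0.5 * torch.randn(300) > 0).long()
train_loader = DataLoader(TensorDataset(X[:200], y[:200]), batch_size=32, shuffle=True)
val_loader = DataLoader(TensorDataset(X[200:], y[200:]), batch_size=64)

model = nn.Sequential(nn.Linear(20, 256), nn.ReLU(), nn.Linear(256, 2))
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
loss_fn = nn.CrossEntropyLoss()

def run_epoch(loader: DataLoader, train: bool) -> float:
    """One pass over `loader`; weights are updated only when `train` is True."""
    model.train(train)  # train(False) is equivalent to eval()
    total, count = 0.0, 0
    with torch.set_grad_enabled(train):
        for xb, yb in loader:
            loss = loss_fn(model(xb), yb)
            if train:
                optimizer.zero_grad()
                loss.backward()
                optimizer.step()
            total += loss.item() * len(xb)
            count += len(xb)
    return total / count  # mean loss per sample

history = {"train": [], "val": []}
for epoch in range(50):
    history["train"].append(run_epoch(train_loader, train=True))
    history["val"].append(run_epoch(val_loader, train=False))
    if epoch % 10 == 0:
        print(f"epoch {epoch:3d}  train {history['train'][-1]:.3f}  val {history['val'][-1]:.3f}")
```

Plotting the two lists in `history` against the epoch number is usually the quickest way to see where validation loss stops improving.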

How to Detect Overfitting in PyTorch Neural Networks

Detecting overfitting is a critical step before addressing it. Here are some common methods to identify if your PyTorch neural network is overfitting:

1. High Training Accuracy but Low Validation Accuracy

One of the most straightforward ways to detect overfitting is by comparing the accuracy or loss on the training set versus the validation set. An overfit network will show high accuracy on the training data but significantly lower accuracy on the validation data.
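This comparison can be scripted with a small helper. The sketch assumes a classification model that returns one logit per class and `DataLoader`s yielding `(inputs, labels)` batches; `accuracy` and `generalization_gap` are hypothetical helper names, and what counts as a "large" gap depends on the task.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

@torch.no_grad()
def accuracy(model: nn.Module, loader: DataLoader) -> float:
    """Fraction of correctly classified samples, computed in eval mode."""
    model.eval()  # disable dropout, use running BatchNorm statistics
    correct, total = 0, 0
    for xb, yb in loader:
        preds = model(xb).argmax(dim=1)
        correct += (preds == yb).sum().item()
        total += yb.numel()
    return correct / total

def generalization_gap(model: nn.Module, train_loader: DataLoader, val_loader: DataLoader) -> float:
    """Training accuracy minus validation accuracy; large positive values suggest overfitting."""
    return accuracy(model, train_loader) - accuracy(model, val_loader)
```

For example, a gap of 0.25 would mean the model is 25 percentage points more accurate on the data it was trained on than on the validation data.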

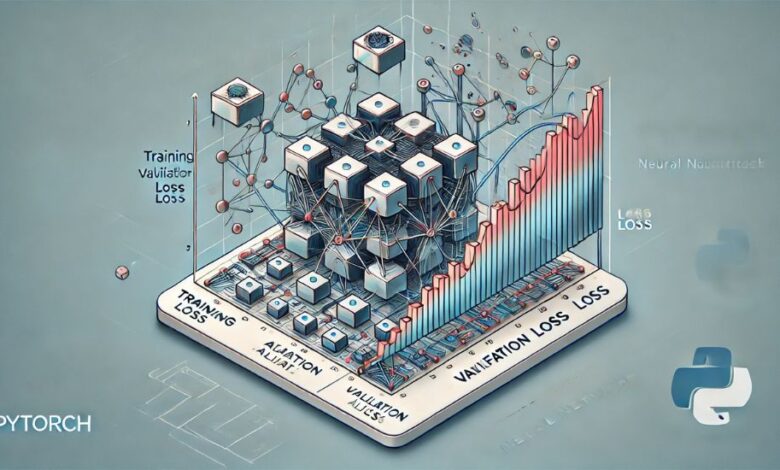

2. Increasing Validation Loss

When training a neural network in PyTorch, if the validation loss starts increasing while the training loss continues to decrease, it is a clear indication of overfitting. This divergence between training and validation losses signifies that the model is learning specific features of the training data that do not generalize.
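The same divergence can be checked programmatically. The hypothetical helper below takes per-epoch training and validation losses as Python lists and flags the pattern described above; the window size is an assumption you would tune, because validation loss often fluctuates from one epoch to the next.

```python
def validation_diverging(train_losses: list[float], val_losses: list[float], window: int = 5) -> bool:
    """Heuristic: over the last `window` epochs, did training loss fall while
    validation loss rose? Returns False when there is not enough history."""
    if len(train_losses) <= window or len(val_losses) <= window:
        return False
    train_falling = train_losses[-1] < train_losses[-1 - window]
    val_rising = val_losses[-1] > val_losses[-1 - window]
    return train_falling and val_rising

# Made-up loss curves for illustration.
train = [1.0, 0.8, 0.6, 0.5, 0.4, 0.3, 0.2]
val = [1.0, 0.9, 0.85, 0.9, 0.95, 1.0, 1.1]
print(validation_diverging(train, val))  # True
```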

3. Performance Deterioration on Test Data

The ultimate goal of any model is to perform well on unseen test data. When a model shows high accuracy during training but performs poorly on a held-out test set drawn from the same distribution as the training data, overfitting is usually the culprit.

Techniques to Prevent Overfitting in PyTorch Neural Networks

Now that we’ve explored the causes and detection of overfitting, let’s dive into some effective techniques to prevent it in PyTorch neural networks.

1. Use Dropout Regularization

Dropout is a powerful regularization technique that randomly sets a fraction p of the activations in a layer to zero during training. In PyTorch, you add it with the nn.Dropout module, which also scales the remaining activations by 1/(1 - p) during training and passes inputs through unchanged in evaluation mode (after model.eval()). This technique helps to prevent the model from relying too heavily on any single neuron, thereby reducing overfitting.
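A sketch of a small classifier with `nn.Dropout` after each hidden layer. The class name `MLPWithDropout`, the layer sizes, and the dropout probability are illustrative assumptions; dropout is usually left off the final output layer.

```python
import torch
import torch.nn as nn

class MLPWithDropout(nn.Module):
    """Hypothetical classifier with dropout after each hidden layer."""

    def __init__(self, in_features: int, hidden: int, num_classes: int, p: float = 0.5):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(in_features, hidden),
            nn.ReLU(),
            nn.Dropout(p),                   # zero activations with probability p (training only)
            nn.Linear(hidden, hidden),
            nn.ReLU(),
            nn.Dropout(p),
            nn.Linear(hidden, num_classes),  # no dropout on the output logits
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.net(x)

model = MLPWithDropout(in_features=20, hidden=128, num_classes=2, p=0.3)
logits = model(torch.randn(4, 20))
print(logits.shape)  # torch.Size([4, 2])
```

Values of p between roughly 0.1 and 0.5 are common starting points, but the right amount of dropout depends on the model and dataset.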

2. Implement Weight Decay

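Weight decay penalizes large weights, helping to prevent overfitting. With plain SGD it is equivalent to L2 regularization (adding a penalty proportional to the squared weights to the loss); with adaptive optimizers such as Adam the two differ, which is why PyTorch also provides torch.optim.AdamW with decoupled weight decay. In PyTorch, weight decay is applied by setting the weight_decay parameter in the optimizer.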
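A sketch of both options, with illustrative values for `lr` and `weight_decay`; in practice you would create only one optimizer. The last part shows a common but optional convention of excluding bias parameters from weight decay using parameter groups; the variable names `decay` and `no_decay` are hypothetical.

```python
import torch
import torch.nn as nn

model = nn.Sequential(nn.Linear(20, 64), nn.ReLU(), nn.Linear(64, 2))

# SGD: weight_decay adds weight_decay * w to each gradient, which matches
# adding (weight_decay / 2) * ||w||^2 to the loss.
sgd = torch.optim.SGD(model.parameters(), lr=0.01, momentum=0.9, weight_decay=1e-4)

# AdamW: decoupled weight decay, generally preferred over Adam(weight_decay=...).
adamw = torch.optim.AdamW(model.parameters(), lr=1e-3, weight_decay=1e-2)

# Optional: apply weight decay to weight matrices only, not to biases.
decay, no_decay = [], []
for name, param in model.named_parameters():
    (no_decay if name.endswith("bias") else decay).append(param)

optimizer = torch.optim.AdamW(
    [
        {"params": decay, "weight_decay": 1e-2},
        {"params": no_decay, "weight_decay": 0.0},
    ],
    lr=1e-3,
)
```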

Keeping the weights small limits how sharply the model can fit individual training examples, which usually leads to better generalization.

3. Use Data Augmentation

Increasing the effective size and diversity of your training data is an effective way to prevent overfitting. In PyTorch, you can use the torchvision.transforms module (from the companion torchvision package) to apply data augmentation techniques, such as random rotations, flips, or scaling, so that each epoch sees slightly different versions of the training images.

Data augmentation introduces variability in the data, which helps the model learn more generalized patterns.

4. Early Stopping

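Another practical method to prevent overfitting is early stopping. By monitoring the validation loss after each epoch, you can stop training once it stops improving and keep the weights from the best epoch. Because validation loss is noisy, it is common to wait a fixed number of epochs without improvement (the patience) rather than stopping at the first increase. Early stopping can be implemented manually or with libraries that automate it, such as the EarlyStopping callback in PyTorch Lightning.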
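A minimal sketch of a hand-written early-stopping helper with patience. The `EarlyStopping` class here is hypothetical (it is not part of PyTorch itself), and the validation losses in the demo are made-up numbers standing in for real per-epoch evaluations.

```python
import copy
import torch.nn as nn

class EarlyStopping:
    """Signal a stop when validation loss has not improved by more than
    `min_delta` for `patience` consecutive epochs; remember the best weights."""

    def __init__(self, patience: int = 5, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_state = None
        self.epochs_without_improvement = 0

    def step(self, val_loss: float, model: nn.Module) -> bool:
        """Record this epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_state = copy.deepcopy(model.state_dict())  # snapshot, not a reference
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience

model = nn.Linear(4, 2)
stopper = EarlyStopping(patience=3)
simulated_val_losses = [0.9, 0.7, 0.6, 0.62, 0.65, 0.61, 0.7]  # made-up values
for epoch, val_loss in enumerate(simulated_val_losses):
    # ... one epoch of training would happen here ...
    if stopper.step(val_loss, model):
        print(f"stopping at epoch {epoch}, best val loss {stopper.best_loss}")
        break
model.load_state_dict(stopper.best_state)  # restore the best weights
```

With these numbers the best loss (0.6) occurs at epoch 2, and the three epochs without improvement that follow trigger the stop at epoch 5.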

5. Cross-Validation

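Cross-validation splits the data into k subsets (folds), trains the model on k - 1 folds, and evaluates it on the remaining fold, rotating until every fold has served once as the validation set. This technique is less common in deep learning because training k models is expensive, but it can be useful in certain scenarios, especially with smaller datasets. Averaging the k validation scores gives a more reliable estimate of how well the model generalizes than a single split, and it makes it less likely that your modeling and hyperparameter choices overfit to one particular validation subset.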
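A sketch of k-fold splitting with plain PyTorch utilities (`torch.randperm` and `torch.utils.data.Subset`) on a synthetic dataset. The `kfold_indices` helper is hypothetical; libraries such as scikit-learn provide equivalent splitters. A fresh model should be built and trained for each fold.

```python
import torch
from torch.utils.data import Subset, TensorDataset

def kfold_indices(n: int, k: int, seed: int = 0):
    """Yield (train_idx, val_idx) index lists for k-fold cross-validation over n samples."""
    g = torch.Generator().manual_seed(seed)
    perm = torch.randperm(n, generator=g).tolist()        # shuffle once
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = perm[start:start + size]                # this fold validates
        train_idx = perm[:start] + perm[start + size:]    # the other k - 1 folds train
        yield train_idx, val_idx
        start += size

# Hypothetical synthetic dataset: 103 samples, 20 features, binary labels.
dataset = TensorDataset(torch.randn(103, 20), torch.randint(0, 2, (103,)))
for fold, (train_idx, val_idx) in enumerate(kfold_indices(len(dataset), k=5)):
    train_set, val_set = Subset(dataset, train_idx), Subset(dataset, val_idx)
    # Build a fresh model here, train it on train_set, and evaluate it on val_set.
    print(f"fold {fold}: {len(train_set)} train / {len(val_set)} val")
```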

6. Batch Normalization

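Batch normalization normalizes each feature of a layer’s inputs using the mean and variance of the current mini-batch, which stabilizes learning and allows for higher learning rates. In PyTorch, batch normalization layers such as nn.BatchNorm1d and nn.BatchNorm2d can be added easily; during evaluation they use running statistics collected during training instead of per-batch statistics.

Batch normalization is particularly useful in deep networks. Its main benefit is faster, more stable training; it was originally motivated as a way to reduce internal covariate shift, although that explanation is debated. It can also have a mild regularizing effect, because per-batch statistics add noise to each example’s activations, but it is not a substitute for dropout or weight decay.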
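A small check of what `nn.BatchNorm1d` does, using synthetic features with a mean around 5 and a standard deviation around 3 (arbitrary values). In training mode the layer normalizes with the current batch’s statistics; in evaluation mode it switches to its running estimates, which is why `model.eval()` matters for batch normalization as well as dropout.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
bn = nn.BatchNorm1d(num_features=4)
x = torch.randn(64, 4) * 3 + 5   # synthetic features: mean ~5, std ~3

bn.train()                       # training: normalize with this batch's mean/variance
out = bn(x)
print(out.mean(dim=0))           # approximately 0 for each feature
print(out.var(dim=0, unbiased=False))  # approximately 1 for each feature

bn.eval()                        # evaluation: use running estimates instead
out_eval = bn(x)
# The running estimates start at mean 0 / variance 1 and were updated from only one
# batch here, so out_eval is not yet normalized; after a full training run they
# approximate the statistics of the training data.
```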

Practical Example: Managing Overfitting in a PyTorch Neural Network

Let’s consider a practical scenario where we train a neural network on a small dataset in PyTorch. This will help demonstrate the implementation of the techniques mentioned above.
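The sketch below combines dropout, batch normalization, weight decay (via `torch.optim.AdamW`), and a simple patience-based early stop on a small synthetic dataset. The data, the `RegularizedNet` architecture, and all hyperparameters are illustrative assumptions rather than tuned values.

```python
import copy
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(42)

# Hypothetical small synthetic dataset: 400 samples, 20 features, 2 classes.
X = torch.randn(400, 20)
y = ((X[:, 0] * X[:, 1] + 0.3 * torch.randn(400)) > 0).long()
train_ds = TensorDataset(X[:300], y[:300])
val_ds = TensorDataset(X[300:], y[300:])
train_loader = DataLoader(train_ds, batch_size=32, shuffle=True)
val_loader = DataLoader(val_ds, batch_size=100)

class RegularizedNet(nn.Module):
    def __init__(self, in_features: int = 20, hidden: int = 64, num_classes: int = 2, p: float = 0.3):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(in_features, hidden),
            nn.BatchNorm1d(hidden),   # batch normalization
            nn.ReLU(),
            nn.Dropout(p),            # dropout
            nn.Linear(hidden, hidden),
            nn.BatchNorm1d(hidden),
            nn.ReLU(),
            nn.Dropout(p),
            nn.Linear(hidden, num_classes),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.net(x)

model = RegularizedNet()
loss_fn = nn.CrossEntropyLoss()
optimizer = torch.optim.AdamW(model.parameters(), lr=1e-3, weight_decay=1e-2)  # weight decay

best_val, best_state, patience, bad_epochs = float("inf"), None, 10, 0
for epoch in range(200):
    model.train()  # dropout on, BatchNorm uses batch statistics
    for xb, yb in train_loader:
        optimizer.zero_grad()
        loss_fn(model(xb), yb).backward()
        optimizer.step()

    model.eval()   # dropout off, BatchNorm uses running statistics
    with torch.no_grad():
        val_loss = sum(loss_fn(model(xb), yb).item() * len(xb) for xb, yb in val_loader) / len(val_ds)

    # Early stopping: keep the best weights, stop after `patience` epochs without improvement.
    if val_loss < best_val:
        best_val, best_state, bad_epochs = val_loss, copy.deepcopy(model.state_dict()), 0
    else:
        bad_epochs += 1
        if bad_epochs >= patience:
            break

model.load_state_dict(best_state)  # restore the best checkpoint
print(f"stopped after epoch {epoch}, best validation loss {best_val:.3f}")
```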

This example demonstrates how to incorporate dropout, batch normalization, and weight decay in a PyTorch neural network to combat overfitting.

Conclusion

Overfitting is a prevalent issue in PyTorch neural networks, particularly in cases where complex architectures or small datasets are used. However, by understanding the causes and using effective techniques like dropout, weight decay, data augmentation, and early stopping, you can significantly reduce overfitting. USA Magzines aims to provide valuable insights into such technical concepts, empowering you to build robust and generalizable models in PyTorch.